These tutorials focus mainly on OpenGL, Win32 programming and the ODE physics engine. OpenGL has moved on to great heights, and although I don't cover the newest features, I do cover all of the basic concepts you will need, with working example programs.

Working with the Win32 API is a great way to get to the heart of Windows and is just as relevant today as ever before, whereas ODE has been marginalized as hardware-accelerated physics becomes more common.

Games and graphics utilities can be made quickly and easily using game engines like Unity, so this, and Linux development in general, will be the focus of my next tutorials.

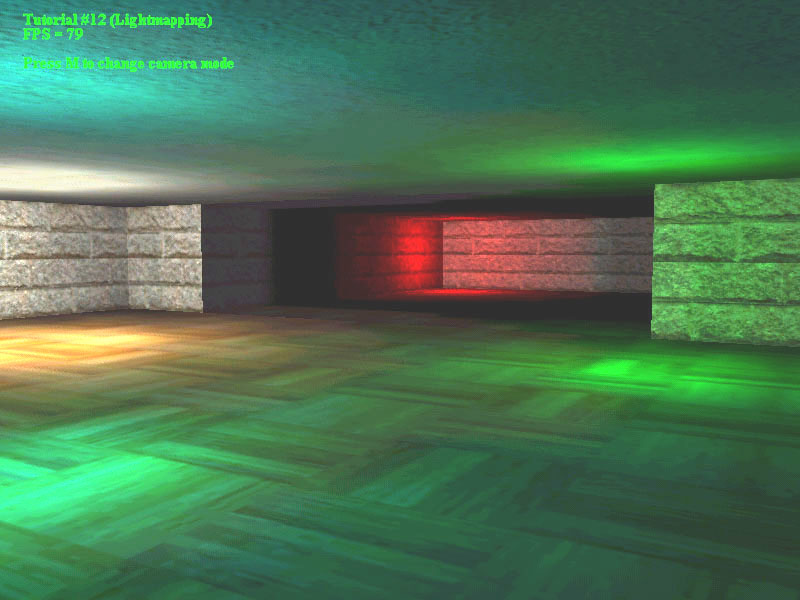

Lightmapping Tutorial

By Alan Baylis 19/12/2001

Lightmapping is still the preferred method of lighting in most games, mainly because it is fast regardless of how many lights are in the scene. If you've ever shot at a light bulb that didn't break, or that broke while the light around it remained, then you have seen lightmaps (or their limitations) in action. They are not suitable for dynamic lighting, though slight variations on the lightmapping theme can produce fake dynamic lights, such as on/off or flickering lights (normally by using multiple lightmaps).

A lightmap is basically just a texture that contains luminance information rather than an image. The elements of the lightmap are referred to as lumels as they represent elements of luminosity. After the lightmap has been generated, the texture to be lit and the lightmap are blended together when applied to the polygon to produce the final effect. The blending can be pre-calculated before runtime to speed up the program, though the trend now is to use hardware multi-texturing.
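As a rough illustration of the pre-calculated blend, the sketch below multiplies one texture color channel by the matching lumel channel, treating a lumel value of 255 as full brightness. The helper name `ModulateTexel` and the 8-bit convention are assumptions made for this example; they are not taken from the demo source.

```cpp
#include <cstdint>

// Hypothetical helper (not from the demo source): scales one 8-bit texture
// channel by one 8-bit lumel channel. A lumel of 255 leaves the texel
// unchanged and a lumel of 0 makes it black.
std::uint8_t ModulateTexel(std::uint8_t texel, std::uint8_t lumel)
{
    // Both operands are promoted to int, so the product cannot overflow.
    // Adding 127 before dividing rounds to the nearest value.
    return static_cast<std::uint8_t>((texel * lumel + 127) / 255);
}
```

Applying this to the red, green and blue channels of every texel gives a pre-lit texture; hardware multi-texturing performs a comparable multiply at draw time instead.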

Lightmaps are almost essential for any game engine these days, but I found that there is a lack of tutorials or clear source code on the subject, so this tutorial and demo are provided to fill in some of the gaps. This is my first attempt at creating lightmaps, so there may be mistakes in the source or other ways of doing it.

Before we begin, note that the example code is compatible with the OpenGL API and deals only with triangles (I will still refer to them as polygons.)

The first step is to calculate the lightmap's UV coordinates. In some cases you could just use the texture's UV coordinates, but this does not always work, so instead we use a process called planar mapping. In brief, this means that we project the polygon's coordinates onto a primary axis plane and then convert the new coordinates to 2D texture space, leaving us with orthogonal UV coordinates that range between 0 and 1.

To project the polygon's coordinates onto a primary axis plane we must first determine which of the three planes to use. This is done by checking which component of the polygon's normal is the largest (using the absolute value). If the x component is largest we map onto the YZ plane, if the y component is largest we map onto the XZ plane, and if the z component is largest we map onto the XY plane. Now that we know which plane we want to map onto, we project the polygon's coordinates onto the plane by using the two relevant components of each vertex and dropping the third. In other words, if we are mapping onto the YZ plane we use the y and z components from each of the polygon's vertices and drop the x component.

In this example code, assume that we are making a lightmap for a single polygon with three vertices and have a lightmap struct that has UV coordinates for each vertex. I also set a flag to indicate which plane we are mapping onto which will be used later.

    poly_normal = polygon.GetNormal();
    poly_normal.Normalize();

    // Using >= means a tie picks the first matching plane, so the normal
    // component we divide by later is always the largest one and never zero.
    if (fabs(poly_normal.x) >= fabs(poly_normal.y) &&
        fabs(poly_normal.x) >= fabs(poly_normal.z))
    {
        flag = 1; // YZ plane: keep y and z, drop x
        lightmap.Vertex[0].u = polygon.Vertex[0].y;
        lightmap.Vertex[0].v = polygon.Vertex[0].z;
        lightmap.Vertex[1].u = polygon.Vertex[1].y;
        lightmap.Vertex[1].v = polygon.Vertex[1].z;
        lightmap.Vertex[2].u = polygon.Vertex[2].y;
        lightmap.Vertex[2].v = polygon.Vertex[2].z;
    }
    else if (fabs(poly_normal.y) >= fabs(poly_normal.x) &&
             fabs(poly_normal.y) >= fabs(poly_normal.z))
    {
        flag = 2; // XZ plane: keep x and z, drop y
        lightmap.Vertex[0].u = polygon.Vertex[0].x;
        lightmap.Vertex[0].v = polygon.Vertex[0].z;
        lightmap.Vertex[1].u = polygon.Vertex[1].x;
        lightmap.Vertex[1].v = polygon.Vertex[1].z;
        lightmap.Vertex[2].u = polygon.Vertex[2].x;
        lightmap.Vertex[2].v = polygon.Vertex[2].z;
    }
    else
    {
        flag = 3; // XY plane: keep x and y, drop z
        lightmap.Vertex[0].u = polygon.Vertex[0].x;
        lightmap.Vertex[0].v = polygon.Vertex[0].y;
        lightmap.Vertex[1].u = polygon.Vertex[1].x;
        lightmap.Vertex[1].v = polygon.Vertex[1].y;
        lightmap.Vertex[2].u = polygon.Vertex[2].x;
        lightmap.Vertex[2].v = polygon.Vertex[2].y;
    }

We then convert these lightmap UV coordinates to 2D texture space. To do this we must firstly find the bounding box of these coordinates (by using the minimum and maximum values) and also find the difference (delta) between these minimum and maximum values. Having done this, we can then make all the lightmap's UV coordinates relative to the origin by subtracting the minimum UV values from the UV coordinates and then scale by dividing by the delta values.

    Min_U = lightmap.Vertex[0].u;
    Min_V = lightmap.Vertex[0].v;
    Max_U = lightmap.Vertex[0].u;
    Max_V = lightmap.Vertex[0].v;

    for (int i = 0; i < 3; i++)
    {
        if (lightmap.Vertex[i].u < Min_U )
            Min_U = lightmap.Vertex[i].u;
        if (lightmap.Vertex[i].v < Min_V )
            Min_V = lightmap.Vertex[i].v;
        if (lightmap.Vertex[i].u > Max_U )
            Max_U = lightmap.Vertex[i].u;
        if (lightmap.Vertex[i].v > Max_V )
            Max_V = lightmap.Vertex[i].v;
    }

    Delta_U = Max_U - Min_U;
    Delta_V = Max_V - Min_V;

    for (int i = 0; i < 3; i++)
    {
        lightmap.Vertex[i].u -= Min_U;
        lightmap.Vertex[i].v -= Min_V;
        lightmap.Vertex[i].u /= Delta_U;
        lightmap.Vertex[i].v /= Delta_V;
    }
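To see the two steps so far working together on concrete numbers, here is a small self-contained sketch. The types `Vec3` and `UV` and the function `PlanarMap` are stand-ins invented for this example (the demo uses its own polygon and lightmap classes), ties between normal components are resolved toward the earlier axis, and degenerate triangles with zero extent along U or V are not handled.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

struct Vec3 { float x, y, z; };  // stand-in vector type for this sketch
struct UV { float u, v; };       // stand-in lightmap coordinate

// Hypothetical helper: returns the plane flag (1 = YZ, 2 = XZ, 3 = XY)
// and writes the normalized lightmap coordinates into `uv`.
int PlanarMap(const std::array<Vec3, 3>& tri, const Vec3& normal,
              std::array<UV, 3>& uv)
{
    const float ax = std::fabs(normal.x);
    const float ay = std::fabs(normal.y);
    const float az = std::fabs(normal.z);

    int flag = 3;
    if (ax >= ay && ax >= az)      flag = 1;
    else if (ay >= ax && ay >= az) flag = 2;

    for (int i = 0; i < 3; ++i) {
        // Keep the two components that lie in the chosen plane.
        if (flag == 1)      uv[i] = { tri[i].y, tri[i].z };
        else if (flag == 2) uv[i] = { tri[i].x, tri[i].z };
        else                uv[i] = { tri[i].x, tri[i].y };
    }

    // Bounding box of the projected coordinates.
    float minU = uv[0].u, maxU = uv[0].u;
    float minV = uv[0].v, maxV = uv[0].v;
    for (const UV& p : uv) {
        minU = std::min(minU, p.u); maxU = std::max(maxU, p.u);
        minV = std::min(minV, p.v); maxV = std::max(maxV, p.v);
    }

    // Shift to the origin, then scale into the 0..1 range.
    for (UV& p : uv) {
        p.u = (p.u - minU) / (maxU - minU);
        p.v = (p.v - minV) / (maxV - minV);
    }
    return flag;
}
```

For a triangle lying flat in the XY plane with corners (0,0,0), (4,0,0) and (0,2,0) and normal (0,0,1), this selects the XY plane and maps the corners to (0,0), (1,0) and (0,1).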

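Now that we have the lightmap's UV coordinates, we can move on to calculating the lumels. We need two edges to interpolate along, and we can make these from the minimum and maximum UV coordinates we calculated, projected back onto the polygon's plane using the plane equation Ax + By + Cz + D = 0. Two of the three coordinates of each corner are already known from the minimum and maximum values (taken before the UVs were scaled to the 0 to 1 range), so we solve the plane equation for the coordinate that was dropped.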

    Distance = - (poly_normal.x * pointonplane.x
                + poly_normal.y * pointonplane.y
                + poly_normal.z * pointonplane.z);

    switch (flag)
    {
        case 1: //YZ Plane
            X = - ( poly_normal.y * Min_U + poly_normal.z * Min_V + Distance )
                / poly_normal.x;
            UVVector.x = X;
            UVVector.y = Min_U;
            UVVector.z = Min_V;
            X = - ( poly_normal.y * Max_U + poly_normal.z * Min_V + Distance )
                / poly_normal.x;
            Vect1.x = X;
            Vect1.y = Max_U;
            Vect1.z = Min_V;
            X = - ( poly_normal.y * Min_U + poly_normal.z * Max_V + Distance )
                / poly_normal.x;
            Vect2.x = X;
            Vect2.y = Min_U;
            Vect2.z = Max_V;
            break;

        case 2: //XZ Plane
            Y = - ( poly_normal.x * Min_U + poly_normal.z * Min_V + Distance )
                / poly_normal.y;
            UVVector.x = Min_U;
            UVVector.y = Y;
            UVVector.z = Min_V;
            Y = - ( poly_normal.x * Max_U + poly_normal.z * Min_V + Distance )
                / poly_normal.y;
            Vect1.x = Max_U;
            Vect1.y = Y;
            Vect1.z = Min_V;
            Y = - ( poly_normal.x * Min_U + poly_normal.z * Max_V + Distance )
                / poly_normal.y;
            Vect2.x = Min_U;
            Vect2.y = Y;
            Vect2.z = Max_V;
            break;

        case 3: //XY Plane
            Z = - ( poly_normal.x * Min_U + poly_normal.y * Min_V + Distance )
                / poly_normal.z;
            UVVector.x = Min_U;
            UVVector.y = Min_V;
            UVVector.z = Z;
            Z = - ( poly_normal.x * Max_U + poly_normal.y * Min_V + Distance )
                / poly_normal.z;
            Vect1.x = Max_U;
            Vect1.y = Min_V;
            Vect1.z = Z;
            Z = - ( poly_normal.x * Min_U + poly_normal.y * Max_V + Distance )
                / poly_normal.z;
            Vect2.x = Min_U;
            Vect2.y = Max_V;
            Vect2.z = Z;
            break;
    }

    edge1.x = Vect1.x - UVVector.x;
    edge1.y = Vect1.y - UVVector.y;
    edge1.z = Vect1.z - UVVector.z;

    edge2.x = Vect2.x - UVVector.x;
    edge2.y = Vect2.y - UVVector.y;
    edge2.z = Vect2.z - UVVector.z;
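The three cases above all do the same thing: they rearrange Ax + By + Cz + D = 0 to solve for whichever coordinate was dropped during projection. The sketch below isolates that step so it can be checked on its own. `Vec3`, `UnprojectToPlane` and `PlaneEquation` are hypothetical names for this example only, and the flag convention (1 = YZ, 2 = XZ, 3 = XY) is assumed to match the code above.

```cpp
struct Vec3 { float x, y, z; };  // stand-in vector type for this sketch

// Hypothetical helper: given the two known coordinates (u, v) on the
// projection plane, solves Ax + By + Cz + D = 0 for the dropped one.
// n is the plane normal (A, B, C) and d is the plane constant D.
Vec3 UnprojectToPlane(int flag, float u, float v, const Vec3& n, float d)
{
    switch (flag) {
    case 1:  return { -(n.y * u + n.z * v + d) / n.x, u, v };  // solve for x
    case 2:  return { u, -(n.x * u + n.z * v + d) / n.y, v };  // solve for y
    default: return { u, v, -(n.x * u + n.y * v + d) / n.z };  // solve for z
    }
}

// Left-hand side of the plane equation; zero means p lies on the plane.
float PlaneEquation(const Vec3& p, const Vec3& n, float d)
{
    return n.x * p.x + n.y * p.y + n.z * p.z + d;
}
```

For the plane z = 5 (normal (0,0,1), D = -5), unprojecting (u, v) = (2, 3) with flag 3 gives the point (2, 3, 5), and feeding that point back into `PlaneEquation` returns zero. Because the divisor is always the dominant component of the normal, it stays well away from zero.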

Now that we have the two edge vectors, we can find the lumel positions in world space by interpolating along these edges using the width and height of the lightmap. The method I use to calculate the color of each lumel was found through trial and error, but it basically uses the Lambert formula to scale the RGB intensities; as soon as I can find some info on this subject it will be improved. I also check which side of the polygon each light is on, so that only front-facing polygons are lit.

    for(int iX = 0; iX < Width; iX++)
    {
        for(int iY = 0; iY < Height; iY++)
        {
            ufactor = (iX / (GLfloat)Width);
            vfactor = (iY / (GLfloat)Height);

            newedge1.x = edge1.x * ufactor;
            newedge1.y = edge1.y * ufactor;
            newedge1.z = edge1.z * ufactor;

            newedge2.x = edge2.x * vfactor;
            newedge2.y = edge2.y * vfactor;
            newedge2.z = edge2.z * vfactor;

            lumels[iX][iY].x = UVVector.x + newedge2.x + newedge1.x;
            lumels[iX][iY].y = UVVector.y + newedge2.y + newedge1.y;
            lumels[iX][iY].z = UVVector.z + newedge2.z + newedge1.z;

            combinedred = 0.0;
            combinedgreen = 0.0;
            combinedblue = 0.0;

            for (int i = 0; i < numStaticLights; i++)
            {
                if (ClassifyPoint(staticlight[i].Position,
                                  pointonplane, poly_normal) == 1)
                {
                    lightvector.x = staticlight[i].Position.x - lumels[iX][iY].x;
                    lightvector.y = staticlight[i].Position.y - lumels[iX][iY].y;
                    lightvector.z = staticlight[i].Position.z - lumels[iX][iY].z;

                    lightdistance = lightvector.GetMagnitude();
                    lightvector.Normalize();
                    cosAngle = DotProduct(poly_normal, lightvector);

                    if (lightdistance < staticlight[i].Radius)
                    {
                        intensity = (staticlight[i].Brightness * cosAngle)
                                    / lightdistance;
                        combinedred += staticlight[i].Red * intensity;
                        combinedgreen += staticlight[i].Green * intensity;
                        combinedblue += staticlight[i].Blue * intensity;
                    }
                }
            }

            if (combinedred > 255.0)
                combinedred = 255.0;
            if (combinedgreen > 255.0)
                combinedgreen = 255.0;
            if (combinedblue > 255.0)
                combinedblue = 255.0;

            lumelcolor[iX][iY][0] = combinedred;
            lumelcolor[iX][iY][1] = combinedgreen;
            lumelcolor[iX][iY][2] = combinedblue;
        }
    }
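The loop above relies on a `ClassifyPoint` function that is not listed in this tutorial. All the listing tells us is that it takes a point, a point on the plane and the plane normal, and that a result of 1 means the point is in front of the polygon. A minimal sketch under those assumptions follows; the return values for "behind" and "on the plane" and the tolerance are guesses for illustration, and `Vec3` is a stand-in type.

```cpp
struct Vec3 { float x, y, z; };  // stand-in vector type for this sketch

// Assumed convention: 1 = in front of the plane, -1 = behind, 0 = on it.
// Only the meaning of 1 is confirmed by the lumel loop; the rest is a guess.
int ClassifyPoint(const Vec3& point, const Vec3& pointOnPlane,
                  const Vec3& normal)
{
    // Signed distance from the plane: the dot product of the normal with
    // the vector from a known point on the plane to the tested point.
    const float distance = normal.x * (point.x - pointOnPlane.x)
                         + normal.y * (point.y - pointOnPlane.y)
                         + normal.z * (point.z - pointOnPlane.z);

    const float epsilon = 0.001f;  // arbitrary tolerance for "on the plane"
    if (distance > epsilon)  return 1;
    if (distance < -epsilon) return -1;
    return 0;
}
```

Since a light only contributes when it is classified as in front, `cosAngle` in the loop is positive for every light that passes the check.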

This next part takes the lumelcolor array and puts it into an RGBA format suitable for saving as your preferred image type.

    // Here lightmap is the output byte buffer (Width * Height * 4 bytes),
    // stored row by row, so each row is Width * 4 bytes long.
    for(int iX = 0; iX < (Width * 4); iX += 4)
    {
        for(int iY = 0; iY < Height; iY += 1)
        {
            lightmap[iX + iY * Width * 4] = (unsigned char)lumelcolor[iX / 4][iY][0];
            lightmap[iX + iY * Width * 4 + 1] = (unsigned char)lumelcolor[iX / 4][iY][1];
            lightmap[iX + iY * Width * 4 + 2] = (unsigned char)lumelcolor[iX / 4][iY][2];
            lightmap[iX + iY * Width * 4 + 3] = 255;
        }
    }

In closing, a few things to note are:

The static lights contain red, green and blue values ranging from 0-255 as well as brightness and radius values. Balancing the brightness and radius is a manual task that can produce different effects; experiment with these depending on the light effect you want.

Contrary to intuition, a smaller lightmap makes for a better effect. If the lightmap is too large you will end up with very obvious rings of light rather than a smooth blend. A 16x16 lightmap seems to be the best size.

When two polygons of different sizes are placed edge to edge with a light shining on both of them, the light values on either side of the edge do not match as we would want. I am pretty sure that this occurs because the lightmap is scaled differently for each polygon. It isn't a problem when the lightmaps are larger and have more sample points (but then we run into the above problem with rings of light). One solution would be to make polygons that are touching have the same edge lengths, but that would be tedious. I'm still working on a solution to this and would appreciate any thoughts on the subject.
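To get a feel for the first note on balancing brightness and radius, it can help to evaluate the per-light formula from the lumel loop on its own. The sketch below restates that formula for a single color channel; `LitChannel` is a hypothetical name, and it handles one light only, whereas the tutorial sums all lights before clamping.

```cpp
#include <algorithm>

// Restates the per-light formula from the lumel loop for one channel
// (0-255) and one light, clamped to 255. Hypothetical helper.
float LitChannel(float channel, float brightness, float radius,
                 float cosAngle, float distance)
{
    if (distance >= radius)
        return 0.0f;  // outside the light's radius: no contribution

    const float intensity = (brightness * cosAngle) / distance;
    return std::min(channel * intensity, 255.0f);
}
```

With a channel value of 200, a brightness of 1 and a lumel facing the light head on at a distance of 4, the result is 50; raising the brightness to 8 saturates the channel at 255. Just inside the radius the contribution is still roughly brightness * cosAngle / radius, so a high brightness combined with a small radius gives a visible hard edge where the light cuts off.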

References: